Generative AI is rapidly transforming industries, from content creation to data analysis. However, with great power comes great responsibility. Governing generative AI is crucial to harness its potential while minimizing its risks. This blog explores the essentials of AI governance: the dos and don’ts, the regulatory bodies in India, governing principles, techniques, and the best approaches to regulation.

The Dos and Don’ts of Regulating AI

Dos

- Promote Transparency: Make sure AI systems clearly show how they make decisions. This helps build trust and keeps the system accountable.

- Prioritize Ethics: Set up rules to handle issues like bias, privacy, and fairness in AI. Review and update these rules regularly.

- Engage Stakeholders: Include a range of people, such as tech experts, ethicists, and the public, in discussions about how AI should be used and managed.

- Encourage Innovation: Regulations should not stop new ideas from developing. Create policies that support research and development while keeping safety and ethics in mind.

- Focus on Education: Teach AI developers and users about ethical practices and responsible use of AI.

Don’ts

- Overregulate: Too many rules can slow down progress and technological growth. Find a balance.

- Ignore Bias: Failing to address biases in AI can lead to unfair outcomes and harm society.

- Disregard Privacy: Not protecting user data and privacy can damage trust and lead to legal problems.

- Neglect Accountability: Responsibility for every AI system should be clearly assigned, so that misuse is prevented and the system follows the rules.

Who Regulates AI in India?

In India, AI regulation is shaped by several key bodies and policies:

1. NITI Aayog

- National Strategy for Artificial Intelligence: NITI Aayog, the Government of India's policy think tank, created a comprehensive strategy to boost AI research and development in India. The strategy focuses on promoting AI in healthcare, agriculture, education, smart cities, and infrastructure.

2. Ministry of Electronics and Information Technology (MeitY)

- Principles for Responsible AI: MeitY has developed principles to ensure AI systems are ethical, transparent, and accountable. These principles guide the development and deployment of AI to prevent bias and promote fairness.

3. Data Protection Authorities

- Draft National Data Governance Framework Policy: This policy aims to improve data governance, focusing on data privacy and protection. It sets guidelines for data sharing and management, ensuring that personal data is handled responsibly.

- Digital Personal Data Protection (DPDP) Act: This act focuses on safeguarding personal data, emphasizing consent, and ensuring individuals have control over their data. It plays a crucial role in regulating how AI systems handle personal information.

What Governs AI?

AI governance rests on several complementary frameworks that together ensure responsible use:

- Legal Frameworks: Laws and regulations on data protection, cybersecurity, and AI deployment define what legal compliance requires.

- Ethical Guidelines: Principles of fairness, accountability, and transparency shape the ethical landscape of AI.

- Technical Standards: Standards ensure the interoperability, safety, and reliability of AI systems.

What Are the Techniques of AI Governance?

- Algorithm Audits: Regular audits of AI systems help identify biases and inaccuracies in algorithms.

- Impact Assessments: Evaluating the potential impact of AI applications on society and individuals helps mitigate risks.

- Ethics Committees: Establishing ethics committees to review AI projects ensures alignment with ethical standards.

- Transparency Reports: Publishing reports on AI system operations and decision-making processes promotes accountability.

- Feedback Mechanisms: Implementing channels for user and stakeholder feedback aids continuous improvement and adaptation.
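To make the idea of an algorithm audit more concrete, here is a minimal Python sketch of one common fairness check: comparing how often each group receives a positive decision. It assumes decisions are already logged as 0/1 values alongside a group label. The function names are hypothetical, and the 0.8 threshold (the "four-fifths" heuristic often used in disparate-impact analysis) is an illustrative choice, not a requirement of any Indian regulation.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive (1) decisions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def audit_disparate_impact(decisions, groups, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns (ratios, flagged): ratios maps group -> rate / best rate,
    and flagged lists groups whose ratio falls below the threshold.
    """
    rates = selection_rates(decisions, groups)
    best = max(rates.values())
    # If no group received any positive decision, treat all groups as equal.
    ratios = {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


if __name__ == "__main__":
    decisions = [1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    ratios, flagged = audit_disparate_impact(decisions, groups)
    print(ratios, flagged)
```

In the sample data, group A is approved 75% of the time and group B 25%, so B's ratio is about 0.33 and it is flagged for review. A real audit would also look at error rates and data quality, not selection rates alone.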

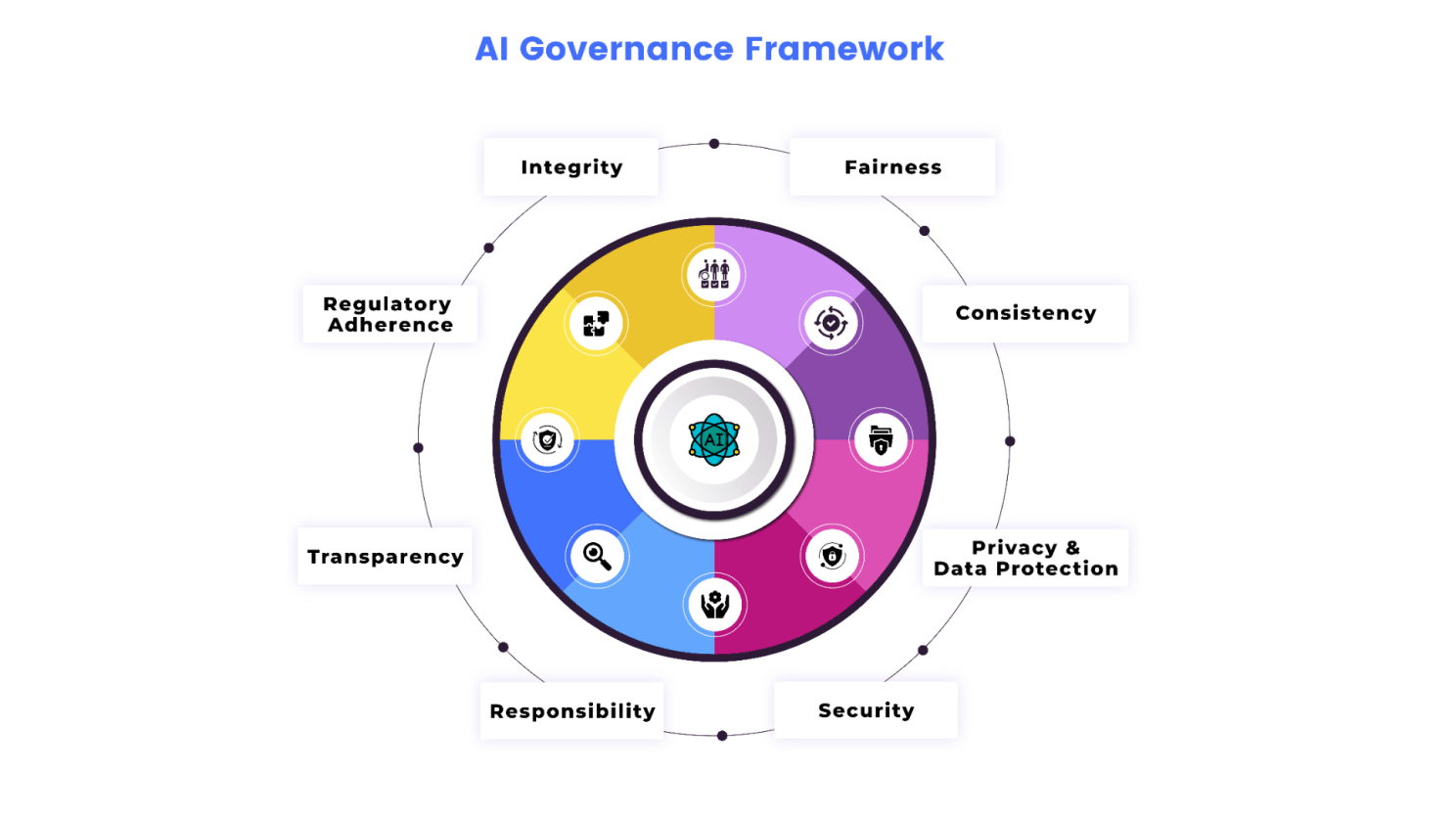

AI Governance Framework

The AI Governance Framework helps organizations manage AI responsibly as the technology advances. As AI becomes more common in daily life, using it ethically matters more, and this framework offers guidance on handling both its challenges and its benefits.

By following these principles, organizations can realize AI’s benefits while reducing its risks, making sure the technology helps everyone.

- Integrity: AI systems must operate with honesty and uphold ethical standards.

- Fairness: AI should treat all users equitably, minimizing bias and discrimination.

- Consistency: AI decisions should be reliable and consistent across different scenarios.

- Privacy & Data Protection: Personal information must be safeguarded in line with data protection laws.

- Security: AI systems must be protected from vulnerabilities and cyber threats.

- Responsibility: Accountability for AI actions must be clearly defined, with human oversight.

- Transparency: AI processes should be understandable and explainable to users.

- Regulatory Adherence: AI systems must comply with applicable laws and follow relevant industry standards to avoid legal issues.
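The Consistency and Fairness principles can be checked in practice. The minimal Python sketch below re-runs a model on copies of each case that differ only in one attribute that should not affect the outcome, and reports any case whose decision changes. The `toy_model`, its fields, and its deliberately biased rule are hypothetical, included only to show what a failing check looks like.

```python
from typing import Callable, Dict, List

Case = Dict[str, object]


def consistency_check(model: Callable[[Case], str],
                      cases: List[Case],
                      field: str,
                      alternatives: List[object]) -> List[Case]:
    """Return cases whose decision changes when only `field` is varied."""
    inconsistent = []
    for case in cases:
        baseline = model(case)
        for value in alternatives:
            variant = dict(case, **{field: value})  # copy with one field swapped
            if model(variant) != baseline:
                inconsistent.append(case)
                break
    return inconsistent


def toy_model(applicant: Case) -> str:
    """Hypothetical, deliberately flawed model that uses gender."""
    if applicant["income"] >= 50000:
        return "approve"
    if applicant["gender"] == "M" and applicant["income"] >= 40000:
        return "approve"
    return "reject"


if __name__ == "__main__":
    cases = [
        {"income": 60000, "gender": "F"},
        {"income": 45000, "gender": "F"},
        {"income": 30000, "gender": "M"},
    ]
    print(consistency_check(toy_model, cases, "gender", ["F", "M"]))
```

Only the applicant earning 45,000 is reported, because switching the gender field flips that decision from reject to approve. This kind of counterfactual test complements, rather than replaces, the group-level audits described earlier.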

For more tips on using AI in business effectively, read AI Navigator: A Leader’s Guide To Exponential Growth Using AI on Kindle. This book provides strategies to grow with AI while following governance rules.


What Is the Right Way to Regulate AI?

Regulating AI effectively involves a balanced approach:

1. Adaptive Regulation: Develop flexible regulations that can evolve with technological advancements, allowing for innovation while ensuring safety.

2. International Collaboration: Collaborate with international bodies to create consistent global standards and address cross-border challenges.

3. Risk-Based Approach: Focus on regulating high-risk AI applications more strictly, while allowing low-risk innovations more freedom.

4. Public Engagement: Foster open dialogue with the public to understand concerns and expectations, integrating them into policy development.

5. Continuous Monitoring: Regularly review and update regulations to keep pace with AI developments and emerging ethical issues.
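A risk-based approach can be pictured as a mapping from an application's characteristics to a risk tier, and from each tier to a set of obligations. The Python sketch below is hypothetical throughout: the three tiers are loosely modeled on the tiered structure of the EU AI Act, and the domain list, criteria, and obligations are illustrative assumptions, not drawn from any Indian regulation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class RiskTier(Enum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


# Hypothetical domains treated as high-risk, for illustration only.
HIGH_RISK_DOMAINS = {"healthcare", "credit", "hiring", "law_enforcement"}

# Hypothetical obligations: higher tiers carry more checks.
OBLIGATIONS = {
    RiskTier.MINIMAL: ["voluntary code of conduct"],
    RiskTier.LIMITED: ["disclose AI use to users"],
    RiskTier.HIGH: ["disclose AI use to users", "algorithm audit",
                    "impact assessment", "human oversight"],
}


@dataclass
class AIApplication:
    name: str
    domain: str
    affects_individuals: bool    # output drives decisions about specific people
    interacts_with_public: bool  # e.g. a public-facing chatbot


def classify(app: AIApplication) -> RiskTier:
    """Assign a risk tier using the hypothetical rules above."""
    if app.domain in HIGH_RISK_DOMAINS and app.affects_individuals:
        return RiskTier.HIGH
    if app.affects_individuals or app.interacts_with_public:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


def obligations_for(app: AIApplication) -> List[str]:
    """Look up the obligations attached to an application's tier."""
    return OBLIGATIONS[classify(app)]


if __name__ == "__main__":
    apps = [
        AIApplication("loan screening", "credit", True, False),
        AIApplication("support chatbot", "retail", False, True),
        AIApplication("log summarizer", "it_ops", False, False),
    ]
    for app in apps:
        print(app.name, classify(app).name, obligations_for(app))
```

With these sample applications, the loan screener lands in the high tier and picks up audit and oversight obligations, while an internal log summarizer stays in the minimal tier. Keeping the rules in data (the set and dictionary) rather than in the classifier's logic also fits adaptive regulation and continuous monitoring: tiers and obligations can be revised without rewriting the code.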

Governing generative AI is a complex but essential task that requires a collaborative effort from policymakers, industry leaders, and the public. By following ethical guidelines, engaging stakeholders, and implementing adaptive regulations, we can ensure that AI serves humanity positively and equitably. As we move forward, the key lies in striking a balance between fostering innovation and safeguarding ethical standards, ultimately guiding us toward a future where AI benefits everyone.